I blurted out something odd to my niece the other week. She’s only two years old, so she doesn’t really understand what I’m saying, but she was sitting there playing with some toys and her dad’s (my brother’s) iPad. I said to her, “You will grow up and never use a keyboard.” At the time I was just being geeky; I had been reading The Songs of Distant Earth by Arthur C. Clarke, where a future civilisation didn’t really use keyboards because the computers could understand people well enough.

However, this thought has stuck with me for the last two months, and it really stepped up a notch over the last two weeks. For those two weeks I have been on an amazing holiday across Thailand (and Malaysia), and I have had time to ponder but also to see a snippet of the future in action (and not just LED screens on EVERY single surface of every wall). It is a future where our interaction with our cloud (not our devices, but rather our own web) is not reactive but preemptive, and not single-input (the keyboard) but omni-input (every bit of data we feed into it, actively or passively).

A narrative of my preemptive cloud experience

Leading into the trip, Inbox by Gmail automatically gathered all of my trip details into one email thread and highlighted the next relevant item (such as a flight booking) as it drew closer. Google Calendar also went through my inbox and added events to my calendar. It did get one booking wrong: it added a booking for our friends that I had emailed to myself. Luckily, I double-checked and removed that calendar item.

While in Thailand, every day I would load up my Google app and, based on my location and some recent searches, it would show me the weather for the city I was in, the time back home in Australia, and a sprinkling of local news for the country I was in (the news was not helpful, but I guess it was relevant), all without being explicitly asked to do so.

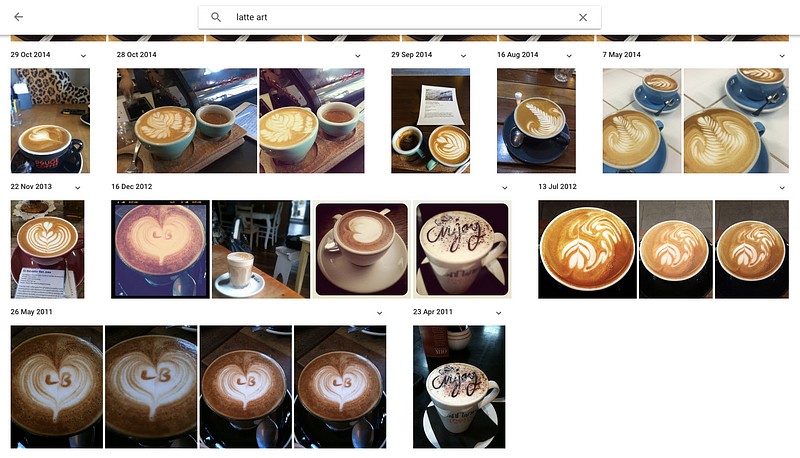

I, of course, used Google Photos throughout, and it knew the location, time, and faces in my photos. I love this because I can search for things within the photos, which came in handy when I was trying to find specific shots amongst a ridiculous number of photos.

The day after my trip ended, the app had automatically created an album of our visit to Thailand. I had to filter out a few photos that were repeats or just random screenshots, but the album was ready to send to my family, again without me even asking for it. Check out the album here.

I cannot help but reason these things through, maybe because I understand how some of it works, but I am still amazed at how passively this mostly ‘just works’. Some could argue that this preemptive interaction was much simpler because the date range was fixed, the locations were ‘foreign’, and all of the services involved were hosted by Google; however, this is just the start.

What about typing?

I wrote the first rough draft of this post entirely by voice, thanks to Apple/Siri’s dictation feature. That was nearly six hundred words without typing a single character. It is reasonably accurate, and the only pain was that the iPhone requires you to restart dictation after about two minutes of speech. Google Docs also recently added fully online speech recognition and voice-driven document editing features (info).

Personally, I grew up typing on a QWERTY keyboard. I have been able to touch type for about seventeen years, I can type as fast as I can talk, and I feel confident getting my thoughts ‘onto digital paper’ when needed. The next generation will be different: they won’t need to interact the way I do, because it will be a completely new paradigm of interaction, one that will keep getting better.

Regarding speech recognition, consider these two quotes from Andrew Ng:

“In five years, we think 50 percent of queries will be on speech or images.” (source)